Radar-video fusion

Get wide-area intrusion protection and reliable 24/7 detection with a fusion of two powerful technologies: video and radar. This unique device provides state-of-the-art deep learning-powered object classification for next-level detection and visualization.

For more context on the topic, please see Axis For Developers.

Before you start, we recommend that you read about radar as you will get data from that technology using AXIS Q1656-DLE.

In terms of integration, AXIS Q1656-DLE has two video channels and two analytics metadata streams corresponding to the video streams:

- One video stream is the same as in AXIS Q1656-LE, but its analytics metadata stream gets input from both the radar's and the camera's detections and classifications (regardless of whether the information is available from both of them or not).

- The second video stream comes from the radar sensor only and visualizes radar analytics in a typical video stream. The second stream's analytics metadata is a radar-based stream, similar in structure to the conventional radar metadata stream. See radar for more information.

- AXIS Q1656-DLE has only one real video channel (the other channel being the radar video channel), compared to the eight video channels in AXIS Q1656 (which result from the possibility to create view areas). It is not possible to create multi-view areas on AXIS Q1656-DLE. See the considerations section at the end of this guideline for more information.

- You can identify the radar stream via a param.cgi call: root.ImageSource.IX.Type=Radar is valid for the radar channel. In AXIS Q1656-DLE, it is root.ImageSource.I1.Type=Radar. The normal video channels do not have a type parameter.
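As a rough illustration of the check described above, the following sketch scans a param.cgi-style listing for image sources whose Type is Radar. The function name, the "name=value" input format, and the sample text (including the I0 entry) are assumptions made for the example; only the root.ImageSource.I1.Type=Radar parameter comes from this guideline.

```python
import re

# Matches lines such as "root.ImageSource.I1.Type=Radar".
_RADAR_TYPE = re.compile(r"^root\.ImageSource\.I(\d+)\.Type=Radar$")


def find_radar_sources(param_text):
    """Return the ImageSource indexes whose Type parameter is Radar.

    param_text is assumed to be a plain-text "name=value" listing, one
    parameter per line (hypothetical input for this sketch).
    """
    indexes = []
    for line in param_text.splitlines():
        match = _RADAR_TYPE.match(line.strip())
        if match:
            indexes.append(int(match.group(1)))
    return indexes


# Hypothetical excerpt: only the radar source carries a Type parameter.
sample = "root.ImageSource.I0.Name=Camera\nroot.ImageSource.I1.Type=Radar\n"
print(find_radar_sources(sample))
```

An empty result would suggest that the listing contains no radar channel, which is the expected outcome for the normal video channels.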

The following URLs are available:

- RTSP URL for camera channel with video and audio:

  /axis-media/media.amp?camera=1&video=1&audio=1

- RTSP URL for radar channel with video and audio:

  /axis-media/media.amp?camera=2&video=1&audio=1

- RTSP URL for analytics radar-video fusion metadata stream for the camera channel:

  /axis-media/media.amp?camera=1&video=0&audio=0&analytics=polygon
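The URLs above share one path and differ only in their query parameters, so a client could assemble them with a small helper. This is a minimal sketch: the function name, the rtsp:// scheme prefix, and the example host address are assumptions, while the path and parameter names are the ones listed above.

```python
from urllib.parse import urlencode


def media_url(host, camera, video=True, audio=True, analytics=None):
    """Build an RTSP URL for one channel of the device.

    The "rtsp://" scheme and the host value are assumptions of this
    sketch; the path and query parameters follow the list above.
    """
    params = [("camera", camera), ("video", int(video)), ("audio", int(audio))]
    if analytics is not None:
        params.append(("analytics", analytics))
    return f"rtsp://{host}/axis-media/media.amp?{urlencode(params)}"


# Camera channel with video and audio, then its fusion metadata stream.
print(media_url("192.0.2.10", 1))
print(media_url("192.0.2.10", 1, video=False, audio=False, analytics="polygon"))
```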

Subscribe to the radar-video fusion metadata stream

- You can subscribe to the radar-video fusion metadata stream starting from AXIS OS 11.2.

- A feature flag, available from AXIS OS 11.2, must be enabled on the camera to start receiving the fused metadata stream from both technologies.

- Starting from AXIS OS 11.5 (scheduled for May 2023), the feature will be enabled by default and the flag will no longer need to be enabled. See AXIS OS release notes for future updates.

You can enable the flag by sending the following request:

curl:

    curl --request POST \
      --anyauth \
      --user "<username>:<password>" \
      --header "Content-Type: application/json" \
      "http://<servername>/axis-cgi/featureflag.cgi" \
      --data '{
        "apiVersion": "1.0",
        "method": "set",
        "params": {
          "flagValues": {
            "radar_video_fusion_metadata": true
          }
        },
        "context": " "
      }'

HTTP:

    POST /axis-cgi/featureflag.cgi
    Host: <servername>
    Content-Type: application/json

    {
      "apiVersion": "1.0",
      "method": "set",
      "params": {
        "flagValues": {
          "radar_video_fusion_metadata": true
        }
      },
      "context": " "
    }

Which returns the following response:

    {
      "apiVersion": "1.0",
      "context": " ",
      "method": "listAll",
      "data": {
        "flags": [
          {
            "name": "radar_video_fusion_metadata",
            "value": true,
            "description": "Include Radar Video Fusion in AnalyticsSceneDescription metadata.",
            "defaultValue": false
          }
        ]
      }
    }
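For integrations that do not shell out to curl, the same "set" request could be prepared with the Python standard library. The sketch below only builds the request object and does not send it. The function name and the example host address are assumptions, and authentication (which the curl example handles with --anyauth) is left out; sending the request would need an authentication handler such as urllib.request.HTTPDigestAuthHandler, depending on how the device is configured.

```python
import json
import urllib.request


def build_flag_request(host, flag_values):
    """Build (but do not send) the featureflag.cgi "set" request."""
    payload = {
        "apiVersion": "1.0",
        "method": "set",
        "params": {"flagValues": flag_values},
        "context": " ",
    }
    return urllib.request.Request(
        f"http://{host}/axis-cgi/featureflag.cgi",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host address; the flag name is the one documented above.
request = build_flag_request("192.0.2.10", {"radar_video_fusion_metadata": True})
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```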

To verify that it is enabled, send the following request:

curl:

    curl --request POST \
      --anyauth \
      --user "<username>:<password>" \
      --header "Content-Type: application/json" \
      "http://<servername>/axis-cgi/featureflag.cgi" \
      --data '{
        "apiVersion": "1.0",
        "method": "listAll",
        "context": " "
      }'

HTTP:

    POST /axis-cgi/featureflag.cgi
    Host: <servername>
    Content-Type: application/json

    {
      "apiVersion": "1.0",
      "method": "listAll",
      "context": " "
    }

Which returns the following response:

    {
      "apiVersion": "1.0",
      "context": " ",
      "method": "listAll",
      "data": {
        "flags": [
          {
            "name": "radar_video_fusion_metadata",
            "value": false,
            "description": "Include Radar Video Fusion in AnalyticsSceneDescription metadata.",
            "defaultValue": false
          }
        ]
      }
    }
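A client can read the flag state from a listAll response by looking up the entry with the matching name. The following is a minimal sketch that assumes the response has the shape shown above. The function name is hypothetical, and the sample body uses "value": true to represent an enabled flag.

```python
import json


def flag_value(response_text, name):
    """Return the current value of a named flag, or None if it is not listed."""
    response = json.loads(response_text)
    for flag in response.get("data", {}).get("flags", []):
        if flag.get("name") == name:
            return flag.get("value")
    return None


# Sample body shaped like the listAll response, with the flag enabled.
sample_response = """
{
  "apiVersion": "1.0",
  "context": " ",
  "method": "listAll",
  "data": {
    "flags": [
      {
        "name": "radar_video_fusion_metadata",
        "value": true,
        "description": "Include Radar Video Fusion in AnalyticsSceneDescription metadata.",
        "defaultValue": false
      }
    ]
  }
}
"""
print(flag_value(sample_response, "radar_video_fusion_metadata"))
```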

Restart the device. The new fields in the Radar-Video Fusion metadata stream should be present. Switching the radar transmission off also changes the metadata stream (in real time).

- RTSP URL for analytics metadata stream for the radar channel:

  /axis-media/media.amp?camera=2&video=0&audio=0&analytics=polygon

- RTSP URL for the event stream, which is the same as on all other Axis devices and is valid for all channels:

  /axis-media/media.amp?video=0&audio=0&event=on

Metadata fields

AXIS Q1656-DLE is placed on the AXIS OS active track and gets the same AXIS OS capabilities.

- Since it is fed by the combination of radar and camera, the main analytics application on this device is AXIS Object Analytics.

- If your customer requires Video Motion Detection, they should use AXIS Q1656-LE instead.

This is a sample frame that includes the new fields (GeoLocation, SphericalCoordinate, Speed, and Direction):

    <?xml version="1.0" ?>
    <tt:SampleFrame xmlns:tt="http://www.onvif.org/ver10/schema" Source="AnalyticsSceneDescription">
      <tt:Object ObjectId="101">
        <tt:Appearance>
          <tt:Shape>
            <tt:BoundingBox left="-0.6" top="0.6" right="-0.2" bottom="0.2" />
            <tt:CenterOfGravity x="-0.4" y="0.4" />
            <tt:Polygon>
              <tt:Point x="-0.6" y="0.6" />
              <tt:Point x="-0.6" y="0.2" />
              <tt:Point x="-0.2" y="0.2" />
              <tt:Point x="-0.2" y="0.6" />
            </tt:Polygon>
          </tt:Shape>
          <tt:Color>
            <tt:ColorCluster>
              <tt:Color X="255" Y="255" Z="255" Likelihood="0.8" Colorspace="RGB" />
            </tt:ColorCluster>
          </tt:Color>
          <tt:Class>
            <tt:ClassCandidate>
              <tt:Type>Vehical</tt:Type>
              <tt:Likelihood>0.75</tt:Likelihood>
            </tt:ClassCandidate>
            <tt:Type Likelihood="0.75">Vehicle</tt:Type>
          </tt:Class>
          <tt:VehicleInfo>
            <tt:Type Likelihood="0.75">Bus</tt:Type>
          </tt:VehicleInfo>
          <tt:GeoLocation lon="-0.000254295" lat="0.000255369" elevation="0" />
          <tt:SphericalCoordinate Distance="40" ElevationAngle="45" AzimuthAngle="88" />
        </tt:Appearance>
        <tt:Behaviour>
          <tt:Speed>20</tt:Speed>
          <tt:Direction yaw="20" pitch="88" />
        </tt:Behaviour>
      </tt:Object>
      <tt:ObjectTree>
        <tt:Delete ObjectId="1" />
      </tt:ObjectTree>
    </tt:SampleFrame>

For video-only targets the metadata would still look like this:

    <?xml version="1.0" ?>
    <tt:SampleFrame xmlns:tt="http://www.onvif.org/ver10/schema" Source="AnalyticsSceneDescription">
      <tt:Object ObjectId="101">
        <tt:Appearance>
          <tt:Shape>
            <tt:BoundingBox left="-0.6" top="0.6" right="-0.2" bottom="0.2" />
            <tt:CenterOfGravity x="-0.4" y="0.4" />
            <tt:Polygon>
              <tt:Point x="-0.6" y="0.6" />
              <tt:Point x="-0.6" y="0.2" />
              <tt:Point x="-0.2" y="0.2" />
              <tt:Point x="-0.2" y="0.6" />
            </tt:Polygon>
          </tt:Shape>
          <tt:Color>
            <tt:ColorCluster>
              <tt:Color X="255" Y="255" Z="255" Likelihood="0.8" Colorspace="RGB" />
            </tt:ColorCluster>
          </tt:Color>
          <tt:Class>
            <tt:ClassCandidate>
              <tt:Type>Vehical</tt:Type>
              <tt:Likelihood>0.75</tt:Likelihood>
            </tt:ClassCandidate>
            <tt:Type Likelihood="0.75">Vehicle</tt:Type>
          </tt:Class>
          <tt:VehicleInfo>
            <tt:Type Likelihood="0.75">Bus</tt:Type>
          </tt:VehicleInfo>
        </tt:Appearance>
      </tt:Object>
      <tt:ObjectTree>
        <tt:Delete ObjectId="1" />
      </tt:ObjectTree>
    </tt:SampleFrame>

For radar-only targets with no video history, the metadata would still look like this:

    <?xml version="1.0" ?>
    <tt:SampleFrame xmlns:tt="http://www.onvif.org/ver10/schema" Source="AnalyticsSceneDescription">
      <tt:Object ObjectId="101">
        <tt:Appearance>
          <tt:Shape>
            <tt:BoundingBox left="-0.6" top="0.6" right="-0.2" bottom="0.2" />
            <tt:CenterOfGravity x="-0.4" y="0.4" />
            <tt:Polygon>
              <tt:Point x="-0.6" y="0.6" />
              <tt:Point x="-0.6" y="0.2" />
              <tt:Point x="-0.2" y="0.2" />
              <tt:Point x="-0.2" y="0.6" />
            </tt:Polygon>
          </tt:Shape>
          <tt:Class>
            <tt:ClassCandidate>
              <tt:Type>Vehical</tt:Type>
              <tt:Likelihood>0.75</tt:Likelihood>
            </tt:ClassCandidate>
            <tt:Type Likelihood="0.75">Vehicle</tt:Type>
          </tt:Class>
          <tt:VehicleInfo>
            <tt:Type Likelihood="0.75">Vehicle</tt:Type>
          </tt:VehicleInfo>
          <tt:GeoLocation lon="-0.000254295" lat="0.000255369" elevation="0" />
          <tt:SphericalCoordinate Distance="40" ElevationAngle="45" AzimuthAngle="88" />
        </tt:Appearance>
        <tt:Behaviour>
          <tt:Speed>20</tt:Speed>
          <tt:Direction yaw="20" pitch="88" />
        </tt:Behaviour>
      </tt:Object>
      <tt:ObjectTree>
        <tt:Delete ObjectId="1" />
      </tt:ObjectTree>
    </tt:SampleFrame>
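Because video-only targets omit the radar-derived elements, a consumer of the stream should treat them as optional. The sketch below parses one frame with the Python standard library and returns None for any radar field that is absent. The function name, the dictionary keys, and the shortened sample frame are assumptions made for the example; the element and attribute names are taken from the samples above.

```python
import xml.etree.ElementTree as ET

NS = {"tt": "http://www.onvif.org/ver10/schema"}


def summarize_objects(frame_xml):
    """Return one dict per tt:Object; radar fields are None when absent."""
    root = ET.fromstring(frame_xml)
    summaries = []
    for obj in root.findall("tt:Object", NS):
        geo = obj.find("tt:Appearance/tt:GeoLocation", NS)
        sph = obj.find("tt:Appearance/tt:SphericalCoordinate", NS)
        speed = obj.find("tt:Behaviour/tt:Speed", NS)
        direction = obj.find("tt:Behaviour/tt:Direction", NS)
        summaries.append({
            "object_id": obj.get("ObjectId"),
            "lat_lon": None if geo is None
            else (float(geo.get("lat")), float(geo.get("lon"))),
            "distance": None if sph is None else float(sph.get("Distance")),
            "speed": None if speed is None else float(speed.text),
            "yaw": None if direction is None else float(direction.get("yaw")),
        })
    return summaries


# Shortened frame that keeps only the radar-derived elements.
frame = (
    '<tt:SampleFrame xmlns:tt="http://www.onvif.org/ver10/schema"'
    ' Source="AnalyticsSceneDescription">'
    '<tt:Object ObjectId="101"><tt:Appearance>'
    '<tt:GeoLocation lon="-0.000254295" lat="0.000255369" elevation="0" />'
    '<tt:SphericalCoordinate Distance="40" ElevationAngle="45" AzimuthAngle="88" />'
    '</tt:Appearance><tt:Behaviour><tt:Speed>20</tt:Speed>'
    '<tt:Direction yaw="20" pitch="88" /></tt:Behaviour></tt:Object>'
    '</tt:SampleFrame>'
)
print(summarize_objects(frame))
```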

The new metadata fields

GeoLocation

- Provides the longitude and latitude with respect to the camera.

- If you enter the camera's actual coordinates, the metadata stream provides the actual coordinates of the moving object.

The GeoLocation is presented like this:

<tt:GeoLocation lon="-0.000254295" lat="0.000255369" elevation="0" />

Spherical Coordinate

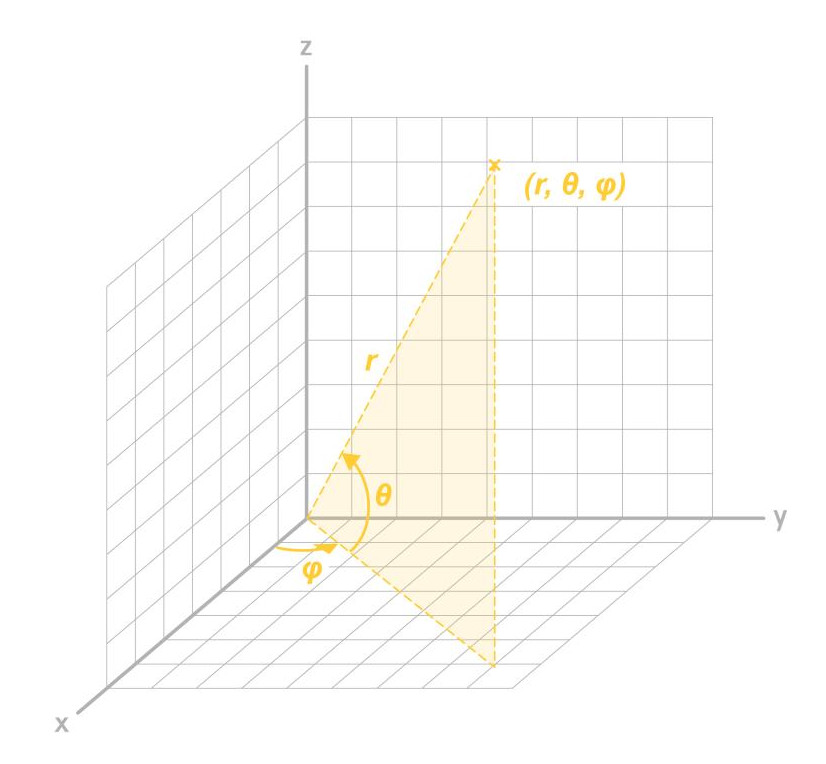

The spherical coordinate system is commonly used in mathematics. It provides the actual distance from the camera to the moving object, the azimuth θ, and the elevation φ, all measured by the radar.

The Spherical Coordinate is presented like this:

<tt:SphericalCoordinate Distance="40" ElevationAngle="45" AzimuthAngle="135" />
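If an application needs Cartesian offsets instead, the three values can be converted with basic trigonometry. The sketch below assumes that the angles are in degrees, that the azimuth is measured in the horizontal plane, and that the elevation is measured up from that plane. These conventions and the function name are assumptions; the convention the device actually uses is the one defined by the figure above and may differ.

```python
import math


def spherical_to_cartesian(distance, elevation_deg, azimuth_deg):
    """Convert (distance, elevation, azimuth) to (x, y, z).

    Assumed convention (not confirmed by this guideline): azimuth lies in
    the horizontal x-y plane and elevation is measured up from that plane.
    """
    elevation = math.radians(elevation_deg)
    azimuth = math.radians(azimuth_deg)
    horizontal = distance * math.cos(elevation)  # range projected on the plane
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = distance * math.sin(elevation)
    return x, y, z


# Values from the SphericalCoordinate example above.
print(spherical_to_cartesian(40, 45, 135))
```

Whatever the axis convention, the length of the resulting vector equals the reported distance, which gives a simple sanity check.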

Speed

- The absolute speed of the detected object. All speed information in the metadata is measured by the radar.

- The unit is meters per second.

The Speed is presented like this:

<tt:Speed>20</tt:Speed>
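Since the value is in meters per second, a client that displays speed in other units has to convert it. A minimal sketch, with hypothetical function names:

```python
def mps_to_kmh(speed_mps):
    """Convert meters per second to kilometers per hour."""
    return speed_mps * 3.6


def mps_to_mph(speed_mps):
    """Convert meters per second to miles per hour (1 mile = 1609.344 m)."""
    return speed_mps * 3600 / 1609.344


# The Speed value from the example above.
print(round(mps_to_kmh(20), 1), "km/h")
print(round(mps_to_mph(20), 1), "mph")
```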

Direction of movement

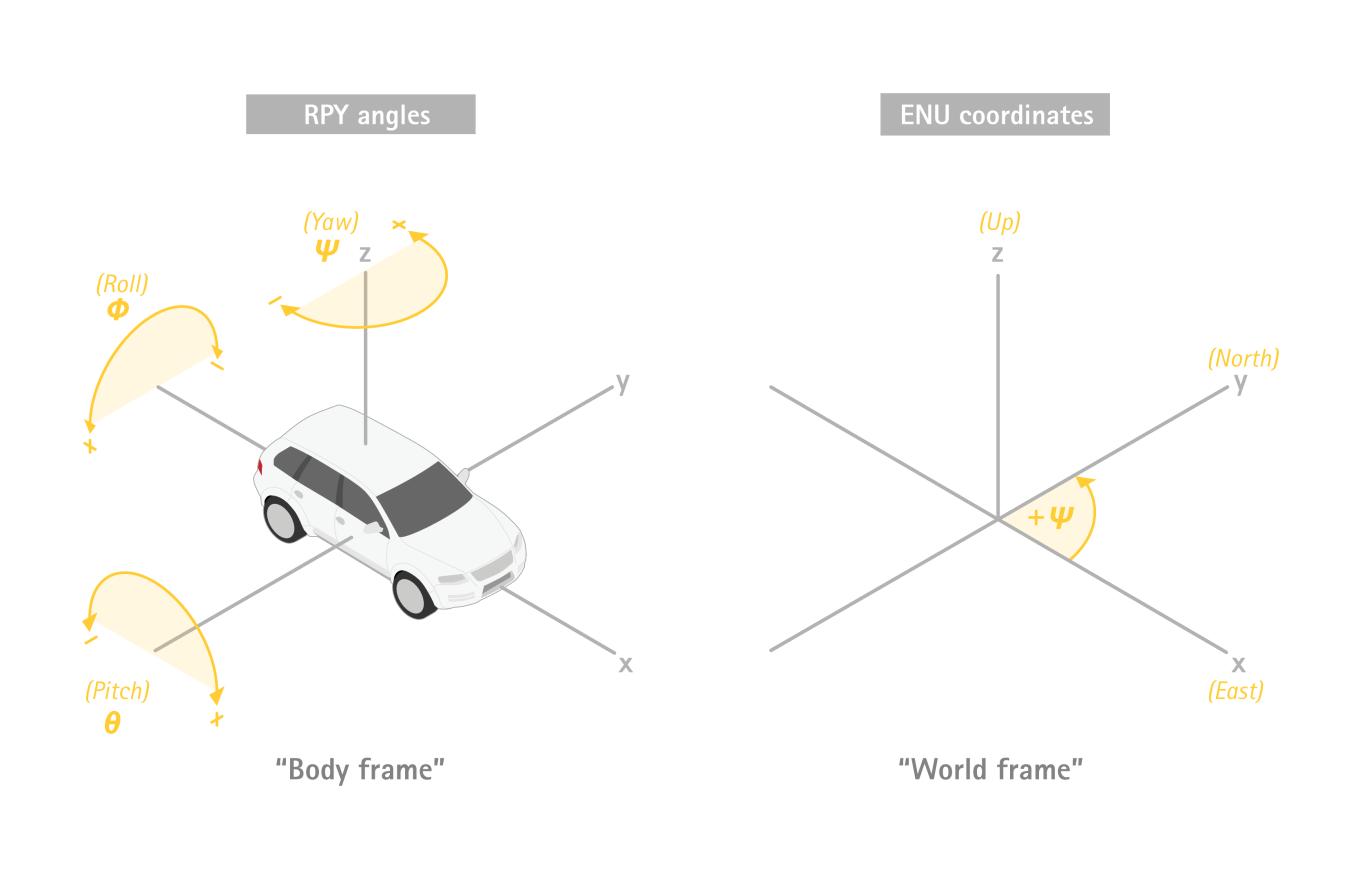

- The direction element describes the direction of movement of an object in the GeoLocation orientation with the angles yaw ψ and pitch θ, provided by the radar.

- The range of yaw ψ is between -180 and +180 degrees, where 0 is rightward and 90 is away from the device.

- The range of pitch θ is between -90 and +90 degrees.

The direction of movement is presented like this:

<tt:Direction yaw="20" pitch="88" />
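The yaw definition above (0 is rightward, 90 is away from the device) maps directly to a unit vector. The sketch below returns (right, away, up) components. The function name is hypothetical, and treating positive pitch as upward is an assumption that the text above does not state.

```python
import math


def direction_vector(yaw_deg, pitch_deg=0.0):
    """Return (right, away, up) components of a unit movement vector.

    Yaw follows the text above: 0 is rightward, 90 is away from the device.
    Positive pitch pointing upward is an assumption of this sketch.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    horizontal = math.cos(pitch)  # share of the movement in the ground plane
    return (
        horizontal * math.cos(yaw),
        horizontal * math.sin(yaw),
        math.sin(pitch),
    )


# Yaw from the example above, with the pitch ignored (ground-plane movement).
right, away, up = direction_vector(20)
print(round(right, 3), round(away, 3), round(up, 3))
```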

Considerations for AXIS Q1656-DLE Radar-Video Fusion Camera

- The video stream rotation feature is not available.

- Corridor format functionality is not available.

- Multi-view areas are not available.

- Digital PTZ is not available.