Deep Learning Processing Unit (DLPU) model conversion

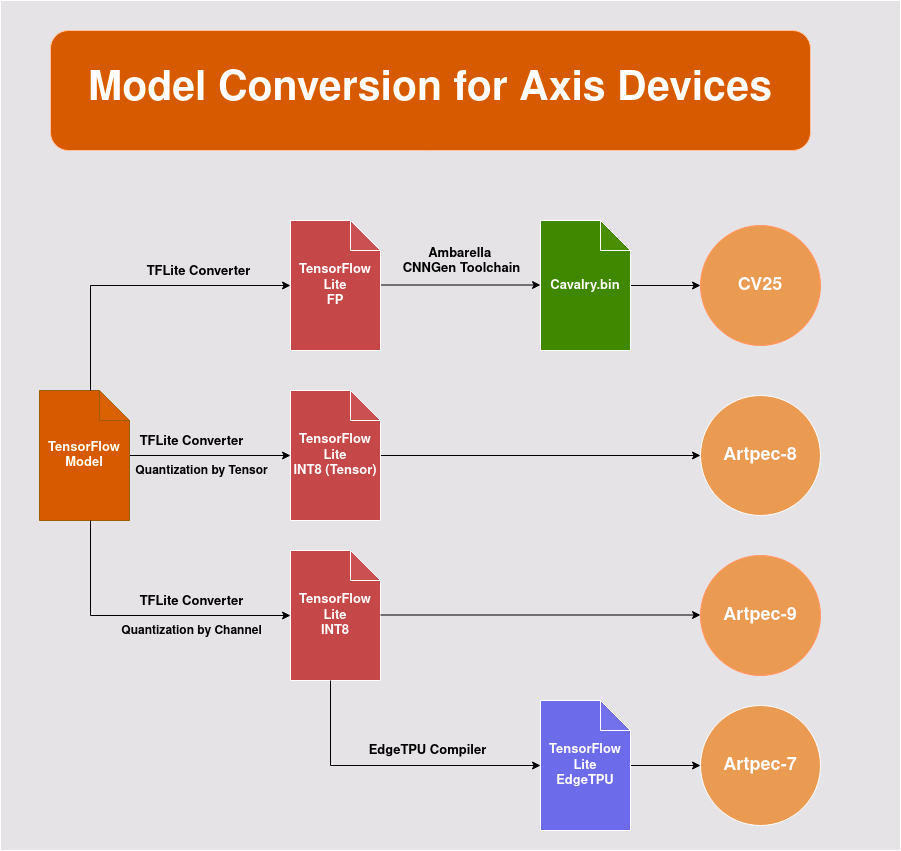

To convert your model for the Deep Learning Processing Unit (DLPU) in your device, follow the procedure for the DLPU it contains. The schematic above shows the steps required for each DLPU.

ARTPEC-7

To convert a model for the ARTPEC-7 DLPU, follow these steps:

- Train a model using TensorFlow.

- Quantize and export the saved model to TensorFlow Lite (tflite) using the TensorFlow Lite converter.

- Compile the model using the EdgeTPU compiler.
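The quantize-and-export step can be sketched with the TensorFlow Lite converter's post-training full-integer quantization. This is a minimal sketch, not the procedure from the Axis examples: the function names, the `samples` argument, and the choice of `uint8` input and output types are assumptions made for illustration. Each sample is assumed to be a float32 array shaped like the model input, including the batch dimension.

```python
def representative_dataset(samples):
    """Wrap an iterable of input arrays as the generator the converter expects."""
    def generator():
        for sample in samples:
            # The converter expects one list per call, with one entry per model input.
            yield [sample]
    return generator


def quantize_saved_model(saved_model_dir, samples, output_path):
    """Export a SavedModel to a fully integer-quantized .tflite file (sketch)."""
    # Imported here so the helper above can be used without TensorFlow installed.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # Calibration data used to estimate activation ranges.
    converter.representative_dataset = representative_dataset(samples)
    # Restrict the model to integer-only builtin operators.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    # Assumption: integer input and output tensors are wanted.
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8

    tflite_model = converter.convert()
    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return output_path
```

A call such as `quantize_saved_model("saved_model", samples, "model_quant.tflite")` would then produce the file that is passed to the compile step; the paths here are placeholders.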

Since the ARTPEC-7 DLPU is based on the EdgeTPU, you can refer to the EdgeTPU guide for instructions on converting your model. We recommend the example on retraining and converting SSD MobileNet for object detection. Note that this guide only covers training the model and converting it for the EdgeTPU. To deploy the model on the device, refer to one of the examples on the develop-your-own-deep-learning-application page.
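As an illustration of the compile step, the sketch below wraps the `edgetpu_compiler` command-line tool from Python. It assumes a quantized `.tflite` file already exists and that `edgetpu_compiler` is installed and on the `PATH`; the function names and file paths are hypothetical. The compiler writes its result next to the chosen output directory with an `_edgetpu.tflite` suffix.

```python
import subprocess
from pathlib import Path


def edgetpu_compile_command(model_path, out_dir):
    """Build the edgetpu_compiler invocation for one quantized model."""
    return ["edgetpu_compiler", "--out_dir", str(out_dir), str(model_path)]


def compiled_model_path(model_path, out_dir):
    """Path the compiler is expected to write: <model name>_edgetpu.tflite."""
    return Path(out_dir) / f"{Path(model_path).stem}_edgetpu.tflite"


def compile_for_edgetpu(model_path, out_dir):
    """Run the compiler and return the path of the compiled model."""
    # check=True raises CalledProcessError if the compiler reports a failure.
    subprocess.run(edgetpu_compile_command(model_path, out_dir), check=True)
    return compiled_model_path(model_path, out_dir)
```

For example, `compile_for_edgetpu("model_quant.tflite", "out")` would be expected to return `out/model_quant_edgetpu.tflite`.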

Many other open-source examples show how to train, quantize, and export a model for the EdgeTPU. You can find them on the Google Coral tutorials page. Most of these tutorials use TensorFlow 1, which Google Coral no longer supports, but they still serve as guidance and can be run locally if you install TensorFlow 1.

Axis also provides an example, tensorflow-to-larod, in the acap-native-examples GitHub repository. It shows how to train, quantize, and export an image classification model for ARTPEC-7.

ARTPEC-8

To convert a model for the ARTPEC-8 DLPU, follow these steps:

- Train a model using TensorFlow.

- Quantize and export the saved model to tflite using the TensorFlow Lite converter.

Note that the ARTPEC-8 DLPU is optimized for per-tensor quantization. To get per-tensor quantization, use the TensorFlow Lite converter from TensorFlow 1.15, or use TensorFlow 2 and set the conversion flag _experimental_disable_per_channel = True on the converter.
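With TensorFlow 2, setting the flag could look like the sketch below. The function names are hypothetical, and `representative_dataset` is assumed to be a generator function that yields calibration inputs. Note that `_experimental_disable_per_channel` is a private converter attribute, so its behavior may change between TensorFlow releases. The second helper is one possible way to check the result: it lists tensors that still carry more than one quantization scale, which indicates per-channel quantization.

```python
def convert_per_tensor(saved_model_dir, representative_dataset, output_path):
    """Export a SavedModel to .tflite with per-tensor quantization (sketch)."""
    # Imported here so the checking helper below works without TensorFlow.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    # The flag named in the text: use one scale per tensor, not per channel.
    converter._experimental_disable_per_channel = True

    with open(output_path, "wb") as f:
        f.write(converter.convert())
    return output_path


def per_channel_tensors(tensor_details):
    """Names of tensors that have more than one quantization scale."""
    return [
        detail["name"]
        for detail in tensor_details
        if len(detail["quantization_parameters"]["scales"]) > 1
    ]


def find_per_channel_tensors(tflite_path):
    """Load a .tflite file and report tensors quantized per channel."""
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=tflite_path)
    interpreter.allocate_tensors()
    return per_channel_tensors(interpreter.get_tensor_details())
```

An empty list from `find_per_channel_tensors` suggests that every quantized tensor in the model uses a single scale.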

We recommend the example on how to retrain and convert SSD MobileNet for object detection. Follow this guide only to train, quantize, and export the model to tflite; do not convert the model to EdgeTPU. To deploy the model on the device, refer to one of the examples on the develop-your-own-deep-learning-application page.

Axis also provides an example on how to train, quantize, and export an image classification model for ARTPEC-8 in the acap-native-examples GitHub repository. The example is called tensorflow-to-larod-artpec8.

We also provide a guide on how to convert YOLOv5 to tflite and optimize it for ARTPEC-8. You can find this guide in the axis-model-zoo repository.

ARTPEC-9

To convert a model for the ARTPEC-9 DLPU, follow these steps:

- Train a model using TensorFlow.

- Quantize and export the saved model to TensorFlow Lite (tflite) using the TensorFlow Lite converter.

The conversion process for ARTPEC-9 DLPU is very similar to the conversion process for ARTPEC-7 DLPU. The only difference is that you don't have to compile your model using the EdgeTPU compiler. The ARTPEC-9 DLPU supports both per-channel and per-tensor quantization.
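To make the difference between the two schemes concrete, here is a simplified, framework-free illustration of symmetric int8 weight quantization. It is not the converter's actual implementation; the function names and the toy weights are made up for the example. Per-tensor quantization shares one scale across all weights, while per-channel quantization computes one scale per output channel.

```python
def symmetric_scale(values, qmax=127):
    """Scale that maps the largest magnitude in `values` onto qmax."""
    peak = max(abs(v) for v in values)
    return peak / qmax if peak else 1.0


def per_tensor_scale(weights):
    """One scale shared by every weight in the tensor."""
    return symmetric_scale([w for channel in weights for w in channel])


def per_channel_scales(weights):
    """One scale for each output channel (each row of `weights`)."""
    return [symmetric_scale(channel) for channel in weights]


def quantize(value, scale, qmax=127):
    """Round to the nearest integer step and clamp to [-qmax, qmax]."""
    return max(-qmax, min(qmax, round(value / scale)))


# Toy weights: the second channel is much smaller than the first.
weights = [[0.5, -1.0], [0.005, 0.02]]

shared = per_tensor_scale(weights)
separate = per_channel_scales(weights)

# With a shared scale, the small channel only uses a few integer levels.
small_channel_shared = [quantize(w, shared) for w in weights[1]]
# With its own scale, the small channel uses the full integer range.
small_channel_separate = [quantize(w, separate[1]) for w in weights[1]]
```

In this toy case the small channel is represented far more coarsely under the shared scale, which is the usual reason per-channel quantization tends to preserve accuracy better when channel magnitudes differ.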

We recommend the example on how to retrain and convert SSD MobileNet for object detection. Follow this guide only to train, quantize, and export the model to tflite; do not convert the model to EdgeTPU. To deploy the model on the device, refer to one of the examples on the develop-your-own-deep-learning-application page.

Axis also provides an example of how to train, quantize, and export an image classification model for ARTPEC-9 in the acap-native-examples GitHub repository. It is the same example as for ARTPEC-8, tensorflow-to-larod-artpec8.

We provide a guide on how to optimize and train YOLOv5 for ARTPEC-9 in the axis-model-zoo repository.

CV25

Note that direct conversion from TensorFlow Lite to the CV25 proprietary format is only supported in CNN Gen version 2.7 and earlier. Later versions can only convert from the ONNX format.

To convert a model for the CV25 DLPU, follow these steps:

- Train a model using TensorFlow.

- Export the saved model to TensorFlow Lite format using the TensorFlow Lite converter.

- Compile the model using the Ambarella toolchain.

Converting a model for the CV25 DLPU is slightly more complex than for the other DLPUs. The toolchain for converting your model is not publicly available. To gain access to the toolchain, contact Ambarella directly.

Once you have access to the toolchain, refer to Ambarella's examples on how to convert a model. Axis also provides an example, tensorflow-to-larod-cv25, in the acap-native-examples GitHub repository that shows how to convert a model for the CV25 DLPU.

Unlike the other DLPUs, the CV25 DLPU requires the input to be in RGB-planar format.
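RGB-planar means that all red values are stored first, followed by all green values and then all blue values, instead of interleaved R, G, B triplets per pixel. The sketch below shows the rearrangement on a raw byte buffer; the function name is hypothetical, and it assumes 8-bit interleaved RGB input with no row padding.

```python
def interleaved_to_planar(pixels: bytes, width: int, height: int) -> bytes:
    """Convert interleaved RGB (RGBRGB...) to planar RGB (RR...GG...BB...)."""
    expected = width * height * 3
    if len(pixels) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(pixels)}")
    # Every third byte starting at offset 0, 1, and 2 gives the R, G, and B planes.
    return pixels[0::3] + pixels[1::3] + pixels[2::3]
```

For an image held as a NumPy array of shape (height, width, 3), `np.transpose(image, (2, 0, 1))` performs the equivalent reordering.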