AXIS Scene Metadata

Axis products produce scene metadata: data about what the device sees. This documentation describes how to consume, interface with, and make use of this data when building applications. The data can support applications such as real-time situational awareness, efficient data searches, and the identification of trends and patterns.

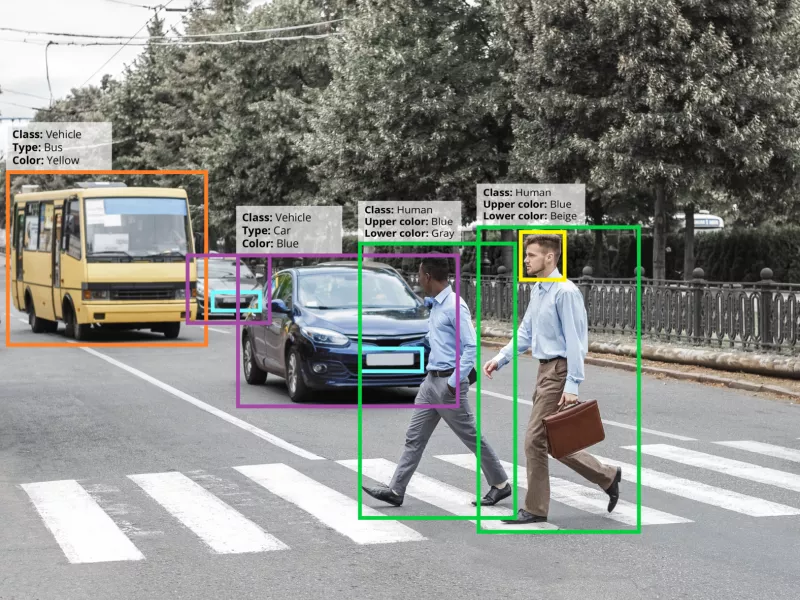

Axis devices such as cameras and radars track and collect information about detected objects, including humans, vehicles, and unclassified movement. Data is available under multiple topics to address different use cases. The table below provides an overview of the topics and their properties:

| Data source | Summary | Availability | Source |
|---|---|---|---|
| Frame-by-frame object tracking | Real-time tracking of objects | RTSP, ACAP SDK, MQTT | Fusion Tracker |
| Consolidated object information | Information about each object is summarized and delivered once the object leaves the scene. | ACAP SDK, MQTT | Track Consolidation |
| Object snapshots | Image snapshots of tracked objects | MQTT | Fusion Tracker |

All topics are encoded in JSON following the Analytics Data Format. Most data is also translated into ONVIF-conformant XML and delivered over RTSP. See the list of data sources for details on each topic, including availability and data schemas.
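Because the topics are JSON-encoded, a client can decode each message with a standard JSON parser before acting on it. The Python sketch below illustrates this by counting the objects in one message per object class. It is not the documented schema: the `frame`, `observations`, `track_id`, `class`, and `type` fields, as well as the `Car` class value, are hypothetical placeholders, and receiving the message (for example over MQTT) is not shown. Adapt the field access to the data schema of the topic you consume.

```python
import json
from collections import Counter

# Hypothetical example message. The field names ("frame", "observations",
# "track_id", "class", "type") and the class values are placeholders for
# illustration only, not the documented Analytics Data Format schema.
EXAMPLE_MESSAGE = """
{
  "frame": {
    "observations": [
      {"track_id": "1", "class": {"type": "Human"}},
      {"track_id": "2", "class": {"type": "Car"}},
      {"track_id": "3"}
    ]
  }
}
"""


def count_objects_by_class(payload: str) -> Counter:
    """Decode one JSON message and count its observations per object class.

    Observations that carry no class are counted as "Unclassified",
    mirroring the unclassified movement that devices can report.
    """
    message = json.loads(payload)
    # Missing keys fall back to empty containers so partial messages decode.
    observations = message.get("frame", {}).get("observations", [])
    counts = Counter()
    for obs in observations:
        object_class = obs.get("class", {}).get("type", "Unclassified")
        counts[object_class] += 1
    return counts


if __name__ == "__main__":
    print(count_objects_by_class(EXAMPLE_MESSAGE))
```

Using defaults when keys are missing keeps the sketch tolerant of messages that omit optional fields; a production client would validate against the real topic schema instead.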

AXIS Scene Metadata is supported across various devices, including products with multiple sensor types. For instance, multi-sensor devices like the AXIS Q1686-DLE radar-video device combine sensor outputs to deliver a coherent and comprehensive scene understanding.

For a complete list of supported products, visit the compatible products page. Click View More to see the full list.

To get started, see the Getting Started guide.

To read more about central concepts in AXIS Scene Metadata, such as object tracking, processing modules, and data framing, see the concepts section.

This documentation describes the AXIS Scene Metadata functionality as of the latest version of the active track for AXIS OS.